-

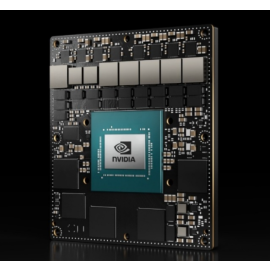

Video card NVIDIA A100 80GB SXM

EAN: 19346

The NVIDIA A100 80GB SXM Tensor Core GPU is engineered to accelerate AI, data analytics, and high-performance computing (HPC) workloads. Built on the NVIDIA Ampere architecture, it features 6,912 CUDA cores and 432 third-generation Tensor Cores, delivering up to 19.5 TFLOPS of FP64 Tensor Core performance and up to 312 TFLOPS of FP16/BF16 Tensor Core performance. The GPU is equipped with 80 GB of HBM2e memory with up to 2 TB/s of memory bandwidth, enabling rapid processing of complex AI models and large datasets.

-

NVIDIA HGX B300 Blackwell Platform

EAN: 19377

The NVIDIA HGX B300 Blackwell Platform is one of NVIDIA’s most powerful AI computing platforms, purpose-built for training trillion-parameter AI models and for real-time inference at scale. It features 16 Blackwell Ultra GPUs interconnected via fifth-generation NVLink, which provides 1.8 TB/s of GPU-to-GPU bandwidth per GPU, and supports up to 2.3 TB of HBM3e memory.

-

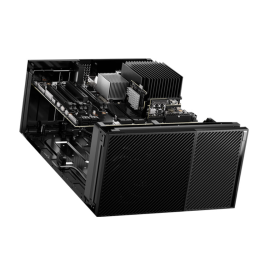

NVIDIA HGX B200 Blackwell Platform

EAN: 19378

The NVIDIA HGX B200 Blackwell Platform is a high-performance eight-GPU server platform designed for large-scale machine learning and data center applications. It features NVIDIA Blackwell B200 GPUs with up to 1.44 TB of total GPU memory and advanced networking with 400 Gb/s connectivity. Powered by dual Intel Xeon Platinum processors, it delivers up to 72 petaFLOPS of FP8 training performance.

-

NVIDIA HGX H200 Hopper Platform

EAN: 19379

The NVIDIA HGX H200 is a server platform designed for AI training and inference as well as high-performance computing (HPC) workloads. It features NVIDIA H200 Tensor Core GPUs built on the Hopper architecture. Compared with its H100-based predecessor, it offers substantially greater memory capacity and bandwidth, with 141 GB of HBM3e memory per GPU at 4.8 TB/s, which benefits large models and memory-bound workloads.

-

NVIDIA DGX Spark

EAN: 19380

The NVIDIA DGX Spark is a compact AI supercomputer powered by the NVIDIA GB10 Grace Blackwell Superchip. It delivers up to 1,000 AI TOPS (tera operations per second) of FP4 performance, making it suitable for fine-tuning, inference, and prototyping of AI models with up to 200 billion parameters. The system features 128 GB of unified LPDDR5x memory with a 256-bit interface and 273 GB/s of memory bandwidth. Storage options include a 1 TB or 4 TB self-encrypting NVMe M.2 SSD.

-

NVIDIA DGX Station

EAN: 19381

The NVIDIA DGX Station is an AI workstation designed to bring data-center-level AI performance to office environments. It features four NVIDIA Tesla V100 GPUs, each with 16 GB of HBM2 memory, for a total of 64 GB of GPU memory. The system is powered by a 20-core Intel Xeon E5-2698 v4 processor and includes 256 GB of DDR4 system memory. Storage consists of three 1.92 TB SSDs in RAID 0 for data and one 1.92 TB SSD for the operating system, providing fast data access.

-

NVIDIA IGX Orin 700

EAN: 19382

The NVIDIA IGX Orin 700 is an industrial-grade edge AI platform designed for mission-critical applications in sectors such as healthcare, transportation, and industrial automation. It combines high-performance computing with functional safety and security features. Its NVIDIA Orin SoC integrates a 12-core Arm Cortex-A78AE CPU and a 2,048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores, which delivers up to 248 INT8 TOPS on its own; with an optional discrete NVIDIA RTX A6000 GPU, the platform reaches up to 1,705 TOPS of AI performance.

-

NVIDIA IGX Orin 500

EAN: 19383

The NVIDIA IGX Orin 500 is an industrial-grade edge AI platform designed for mission-critical applications in sectors such as healthcare, transportation, and industrial automation. It combines high-performance computing with functional safety and security features. Its NVIDIA Orin SoC integrates a 12-core Arm Cortex-A78AE CPU and a 2,048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores, delivering up to 248 INT8 TOPS of AI performance from the integrated GPU.

-

NVIDIA IGX Orin Developer Kit

EAN: 19384

The NVIDIA IGX Orin Developer Kit features a 12-core Arm Cortex-A78AE CPU and an integrated Ampere architecture GPU with 2,048 CUDA cores and 64 Tensor Cores, delivering up to 248 INT8 TOPS of AI performance. It includes 64 GB of LPDDR5 memory with 204.8 GB/s of bandwidth and a 500 GB NVMe SSD. The kit offers advanced networking with dual 100 GbE ports via ConnectX-7 and supports PCIe Gen5 expansion. It also includes USB 3.2 Gen 2, DisplayPort 1.4a, HDMI 2.0b, and integrated Wi-Fi/Bluetooth.

-

NVIDIA GB200 NVL72

EAN: 19385

The NVIDIA GB200 NVL72 is a rack-scale, liquid-cooled AI supercomputer that integrates 36 Grace CPUs and 72 Blackwell GPUs, interconnected via fifth-generation NVLink, delivering 130 TB/s of GPU communication bandwidth. It provides up to 1,440 PFLOPS of FP4 AI performance and supports up to 13.5 TB of HBM3e GPU memory with 576 TB/s of bandwidth.

A server is a device that performs various tasks, most often remotely but also locally. In essence, it is an ordinary computer, only more powerful. Like a desktop computer, it consists of a processor, motherboard, RAM, and storage drives, although large servers often use hardware designed specifically for server duty. Servers are used to run the infrastructure of Internet service providers, to store data, and for web hosting (storing all the files published on a website).

Today, servers are produced in several form factors:

Rack servers, which are mounted in a rack or server cabinet

Tower (floor-standing) servers, which are housed in a system-unit case similar to a desktop PC

Blade servers, compact server modules installed together in a shared chassis to reduce the space they occupy

Servers are usually managed remotely through an operating system installed on them in advance. This is typically Linux or Windows, but in editions designed specifically for server management.
