-

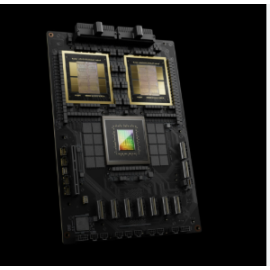

NVIDIA GB200 Grace Blackwell Superchip

EAN: 19386

The NVIDIA GB200 Grace Blackwell Superchip integrates two Blackwell B200 Tensor Core GPUs with a Grace CPU via a 900 GB/s NVLink-C2C interconnect. This configuration delivers up to 40 PFLOPS of FP4 AI performance, 20 PFLOPS of FP8/FP6, and 10 PFLOPS of FP16/BF16, with 384 GB of HBM3e GPU memory offering 16 TB/s bandwidth. The Grace CPU, featuring 72 Arm Neoverse V2 cores, supports up to 480 GB of LPDDR5X memory with 512 GB/s bandwidth.
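The superchip-level totals can be broken down per GPU with simple arithmetic. The Python sketch below uses only the figures quoted above. For illustration, it assumes that HBM3e capacity and bandwidth are split evenly between the two B200 GPUs. It also checks a pattern in the quoted throughput numbers: each halving of numeric precision doubles the peak PFLOPS.

```python
# Superchip-level figures quoted in the GB200 description above.
GPUS_PER_SUPERCHIP = 2
HBM3E_TOTAL_GB = 384
HBM_BANDWIDTH_TOTAL_TBPS = 16
FP4_PFLOPS = 40
FP8_PFLOPS = 20
FP16_PFLOPS = 10

# Assumption: memory and bandwidth are split evenly between the two B200 GPUs.
hbm_per_gpu_gb = HBM3E_TOTAL_GB / GPUS_PER_SUPERCHIP
bandwidth_per_gpu_tbps = HBM_BANDWIDTH_TOTAL_TBPS / GPUS_PER_SUPERCHIP

# In the quoted figures, halving the number format's width doubles peak throughput.
throughput_doubles_per_halving = FP4_PFLOPS == 2 * FP8_PFLOPS == 4 * FP16_PFLOPS

print(f"HBM3e per GPU: {hbm_per_gpu_gb:.0f} GB")                     # HBM3e per GPU: 192 GB
print(f"HBM bandwidth per GPU: {bandwidth_per_gpu_tbps:.0f} TB/s")   # HBM bandwidth per GPU: 8 TB/s
print(f"FP4 = 2 x FP8 = 4 x FP16: {throughput_doubles_per_halving}") # FP4 = 2 x FP8 = 4 x FP16: True
```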

-

NVIDIA GB300 NVL72

EAN: 19387

The NVIDIA GB300 NVL72 is a fully liquid-cooled, rack-scale AI supercomputer that integrates 72 NVIDIA Blackwell Ultra GPUs and 36 NVIDIA Grace CPUs. This configuration delivers up to 1,400 PFLOPS of FP4 AI performance, with 21 TB of HBM3e GPU memory providing 576 TB/s bandwidth.

-

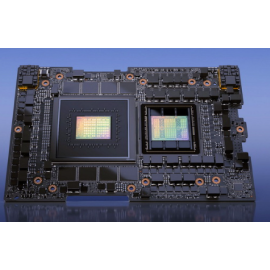

NVIDIA GH200 Grace Hopper Superchip

EAN: 19388

The NVIDIA GH200 Grace Hopper Superchip integrates a 72-core Arm Neoverse V2 Grace CPU with an H100 Tensor Core GPU via a 900 GB/s NVLink-C2C interconnect. This configuration delivers up to 4 PFLOPS of AI performance, with 96 GB of HBM3 GPU memory offering 4 TB/s bandwidth and up to 480 GB of LPDDR5X CPU memory with 500 GB/s bandwidth. The GH200 Superchip is designed for large-scale AI and HPC applications, providing a unified memory architecture and enhanced energy efficiency.

-

NVIDIA GH200 NVL2 Grace Hopper Superchip

EAN: 19389

The NVIDIA GH200 NVL2 Grace Hopper Superchip integrates two GH200 Superchips via NVLink, combining 144 Arm Neoverse V2 CPU cores and two Hopper GPUs. This configuration delivers up to 8 PFLOPS of AI performance, with 288 GB of HBM3e GPU memory offering 10 TB/s bandwidth and up to 960 GB of LPDDR5X CPU memory. The GH200 NVL2 is designed for compute- and memory-intensive workloads, providing 3.5× more GPU memory capacity and 3× more bandwidth than the NVIDIA H100 Tensor Core GPU in a single server.
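The NVL2 figures follow from doubling a single GH200. The short Python check below uses only the numbers quoted in the two GH200 descriptions. The per-GPU HBM3e result (144 GB) is higher than the 96 GB of HBM3 in the single GH200 listed above. This suggests that the NVL2 uses a GH200 variant with more HBM3e per GPU, but that is an inference from the quoted totals, not a stated specification.

```python
# Single-superchip figures quoted in the GH200 description above.
GH200_CPU_CORES = 72
GH200_MAX_LPDDR5X_GB = 480
GH200_AI_PFLOPS = 4

# Figures quoted in the GH200 NVL2 description above.
NVL2_SUPERCHIPS = 2
NVL2_HBM3E_TOTAL_GB = 288

# CPU cores, CPU memory and AI throughput scale with the number of superchips.
nvl2_cpu_cores = NVL2_SUPERCHIPS * GH200_CPU_CORES
nvl2_max_lpddr5x_gb = NVL2_SUPERCHIPS * GH200_MAX_LPDDR5X_GB
nvl2_ai_pflops = NVL2_SUPERCHIPS * GH200_AI_PFLOPS

# GPU memory per Hopper GPU, derived by dividing the quoted NVL2 total.
nvl2_hbm3e_per_gpu_gb = NVL2_HBM3E_TOTAL_GB // NVL2_SUPERCHIPS

print(nvl2_cpu_cores, nvl2_max_lpddr5x_gb, nvl2_ai_pflops, nvl2_hbm3e_per_gpu_gb)
# 144 960 8 144
```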

-

NVIDIA DGX GB300

EAN: 19390

The NVIDIA DGX GB300 is a rack-scale AI supercomputer designed for enterprise-scale AI workloads. It integrates 72 NVIDIA Blackwell Ultra GPUs and 36 Grace CPUs, delivering up to 1,400 petaFLOPS of FP4 AI performance. The system features 20.1 TB of HBM3e GPU memory and a total of 37.9 TB of fast memory. Each GPU is connected via NVIDIA ConnectX-8 VPI networking, providing 800 Gb/s InfiniBand connectivity.

-

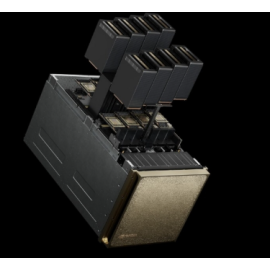

NVIDIA DGX B300

EAN: 19391

The NVIDIA DGX B300 is a next-generation AI system powered by NVIDIA Blackwell Ultra GPUs, delivering up to 72 PFLOPS of FP8 performance for training and 144 PFLOPS of FP4 for inference. It features 2.3 TB of total GPU memory, dual Intel Xeon processors, and high-speed networking with 8x ConnectX-8 VPI interfaces supporting up to 800 Gb/s, plus 2x BlueField-3 DPUs.

-

NVIDIA DGX GB200

EAN: 19392

The NVIDIA DGX GB200 is a cutting-edge, rack-scale AI infrastructure designed to handle the most demanding generative AI workloads, including training and inference of trillion-parameter models. Each liquid-cooled rack integrates 36 NVIDIA GB200 Grace Blackwell Superchips (36 Grace CPUs and 72 Blackwell GPUs in total), interconnected via fifth-generation NVLink to deliver up to 1.8 TB/s of GPU-to-GPU bandwidth.

-

NVIDIA DGX B200

EAN: 19393

The NVIDIA DGX B200 is a high-performance AI system designed for enterprise workloads, integrating eight NVIDIA B200 GPUs with a total of 1,440 GB GPU memory. It delivers up to 72 petaFLOPS for training and 144 petaFLOPS for inference. The system features dual Intel Xeon Platinum 8570 processors, up to 4 TB of system memory, and advanced networking capabilities with NVIDIA ConnectX-7 and BlueField-3 DPUs.

-

NVIDIA DGX H200

EAN: 19394

The NVIDIA DGX H200 is a state-of-the-art AI system engineered for large-scale generative AI and high-performance computing (HPC) workloads. It integrates eight NVIDIA H200 Tensor Core GPUs, each equipped with 141 GB of HBM3e memory, totaling 1,128 GB of GPU memory. This configuration delivers up to 32 petaFLOPS of FP8 performance. The system is powered by dual Intel Xeon Platinum 8480C processors, offering 112 cores in total, and supports 2 TB of DDR5 system memory.
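The DGX H200 totals can be reproduced from the per-GPU and per-socket figures. The minimal Python sketch below uses only the numbers quoted above, plus the DGX B200's 1,440 GB total for a per-GPU comparison. The per-GPU FP8 value is simply the system total divided by eight, which assumes an even split across the GPUs.

```python
# Figures quoted in the DGX H200 description above.
DGX_H200_GPUS = 8
H200_HBM3E_GB = 141
DGX_H200_FP8_PFLOPS = 32
DGX_H200_CPU_SOCKETS = 2
DGX_H200_CPU_CORES_TOTAL = 112

total_gpu_memory_gb = DGX_H200_GPUS * H200_HBM3E_GB
# Assumption: system FP8 throughput is divided evenly across the eight GPUs.
fp8_pflops_per_gpu = DGX_H200_FP8_PFLOPS / DGX_H200_GPUS
cores_per_cpu = DGX_H200_CPU_CORES_TOTAL // DGX_H200_CPU_SOCKETS

# For comparison: per-GPU memory of the DGX B200 listed above (1,440 GB over 8 GPUs).
b200_gb_per_gpu = 1440 // 8

print(f"{total_gpu_memory_gb:,} GB")  # 1,128 GB
print(fp8_pflops_per_gpu)             # 4.0
print(cores_per_cpu)                  # 56
print(b200_gb_per_gpu)                # 180
```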

-

NVIDIA RTX PRO 6000 Blackwell Workstation Edition

EAN: 19395

The NVIDIA RTX PRO 6000 Blackwell Workstation Edition is a state-of-the-art professional GPU engineered to meet the rigorous demands of AI development, large-scale simulations, and advanced creative workflows. Built on the NVIDIA Blackwell architecture, it features 96 GB of GDDR7 memory and delivers up to 4,000 TOPS of AI performance, 125 TFLOPS of single-precision compute, and 380 TFLOPS of ray tracing performance.

Server – a device that performs various tasks, most often remotely but sometimes locally. In essence, a server is a computer, only a more powerful one. Like an ordinary computer, it consists of a processor, a motherboard, RAM, and a hard disk, although large servers usually use components designed specifically for server use. Servers are used to run an Internet service provider's infrastructure, to store data, and for web hosting, where a server stores all the files published on a website.

Servers are currently produced in several form factors:

Rack servers – mounted in a rack or server cabinet

Tower (floor-standing) servers – housed in a case similar to a desktop system unit

Blade servers – compact server modules in which some components (such as power supplies, cooling, and networking) are moved out and consolidated in a shared enclosure (chassis) to reduce the space occupied

Servers are usually managed remotely through an operating system installed on them in advance. This is typically Linux or Windows, but usually in special editions designed for server use, such as Windows Server.
